sourcediver.org

2024/06/28

When using an OpenAPI schema the other day I ran into discrepancies between what the schema describes and what the API actually validates. I began thinking about how such schemas are merely a model of the actual code that is used to deserialize / parse and validate the raw data. I will describe the problem and give a solution that allows re-using the same logic across many platforms using WebAssembly. The post and solution are generic, but the problem applies mostly to industry best practices around HTTP APIs.

An endpoint that is called with some payload or parameters usually has common steps until the actual transaction happens and the result can be returned.

a transaction together with its schema

Clients, especially frontend applications, often do some of the validation steps prior to calling the endpoint. The reasons why this is done vary. Users expect direct feedback whether their input into a form is valid and of course local evaluation is cheaper and faster than calling the service multiple times just to get the errors returned. Or take asynchronous requests where the actual result takes a long time to come back, like when deploying a config to a remote device that only polls every few minutes for updates.

Unless the client is using the same language / framework and a library can be shared, the validation steps are then often shared as a schema like OpenAPI (previously Swagger). These schemas can be written by hand, which is expensive and error-prone, or generated out of the code and types used in a request, which is probably the option most projects aim for. They are then loaded into a schema library that can validate whether the input adheres to it.

In the picture above you see that the schema is describing the steps “Type Parsing” and “Business Validation” on the left. The different shapes indicate that the description is not exactly correct, here is why:

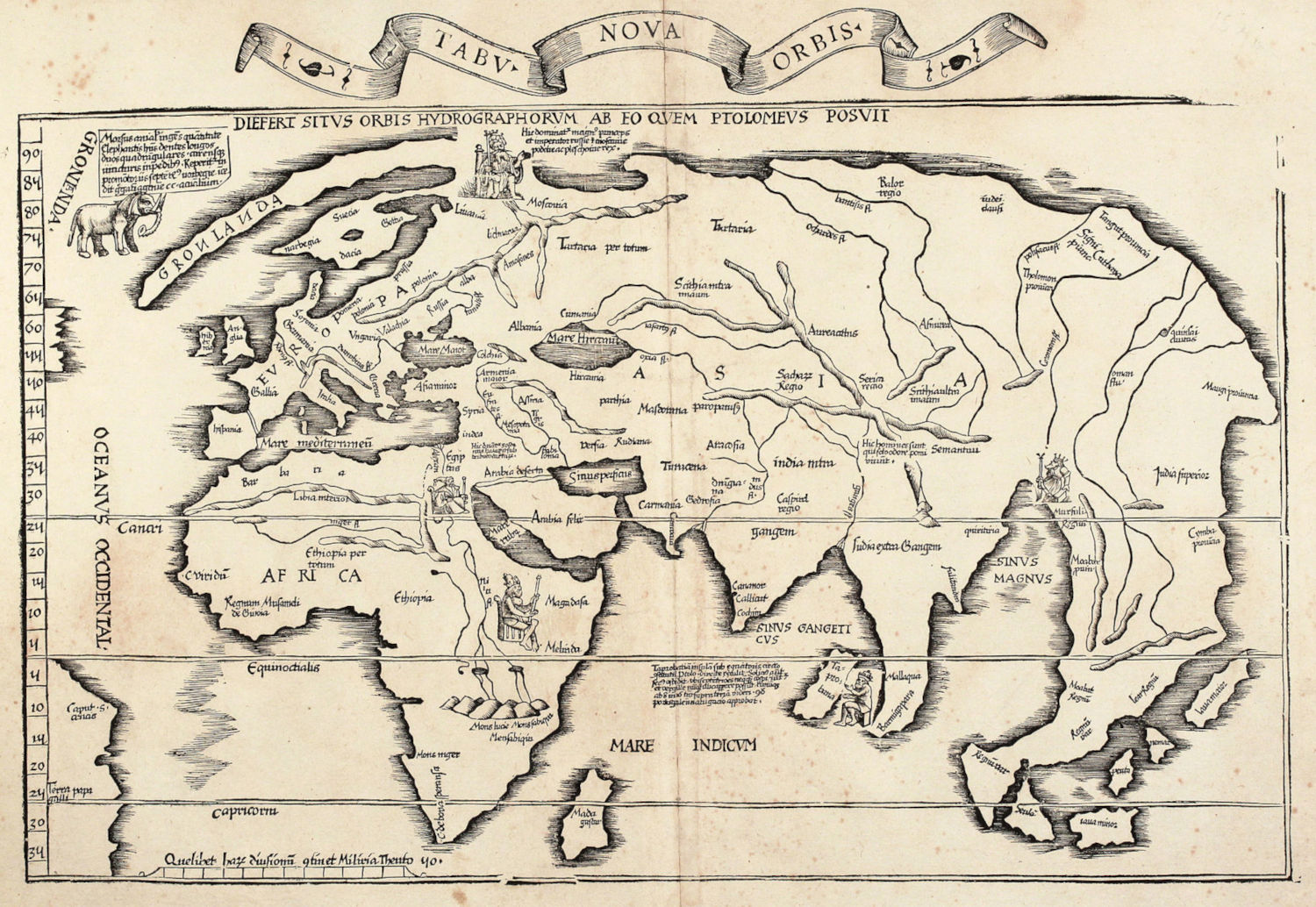

A model is a tool to capture the behavior of a given system. It usually reduces the complexity of the system but this simplification always involves some level of approximation or uncertainty. Just as a map serves as a model of the territory, the schema helps us navigate and understand the API, but it never perfectly captures the complexity of the actual validation logic. In other words, a system built according to a model of another system will behave differently than the original system.

the map as a model of the world - world map by Laurent Fries

When the schema is generated from code and types, the equivalent schema expression is returned. This feature is often provided by frameworks and third party libraries. The equivalency is often assumed, not guaranteed, especially when custom code is written.

Especially when different libraries are used for each individual step, it is difficult to generate a unified schema that represents the combined logic correctly. One might use the favorite data type validation / deserialization (Rust: serde, Go: validator, JS: zod) but then use some custom business validation code that is too complicated to express in types.

For the pseudo-rust code below, the generated schema just states string for hostname but in reality slightly more complex validation happens afterwards.

#[derive(Serialize, Deserialize)]
pub struct CreateHostParams {
    pub hostname: String,
    pub ipv4: Ipv4Addr,
}

let banned_hostnames = Vec::from(["localhost", "batman"]);
// Type parsing: serde turns the raw JSON into the typed struct.
let CreateHostParams { hostname, ipv4 }: CreateHostParams = serde_json::from_str(json_data).unwrap();
// Business validation: this rule is invisible to the generated schema.
if banned_hostnames.contains(&hostname.as_str()) {
    return Err(BusinessValidationError {
        message: "illegal hostname".to_owned(),
        path: "hostname".to_owned(),
    });
}

{
  "$schema": "http://json-schema.org/draft-07/schema#",
  "type": "object",
  "properties": {
    "hostname": {
      "type": "string"
    },
    "ipv4": {
      "type": "string",
      "format": "ipv4"
    }
  },
  "required": ["hostname", "ipv4"]
}

Note: This case can of course be improved by implementing the Trait accordingly and specifying the forbidden hostnames. However, this is often forgotten or simply ignored to keep the codebase smaller / save costs.
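The gap between the schema and the code can be made visible with a small differential check. The following is only a sketch under stated assumptions: `schema_accepts`, `backend_accepts` and `find_drift` are hypothetical names, the schema side is reduced to "any string is fine" (which is all that `"type": "string"` says), and the backend side hard-codes the two banned hostnames from the example above.

```rust
// Hypothetical stand-in for a schema-only check: the generated schema
// declares `"type": "string"` for hostname, so every string passes.
fn schema_accepts(_hostname: &str) -> bool {
    true
}

// Hypothetical stand-in for the backend's actual business rule.
fn backend_accepts(hostname: &str) -> bool {
    const BANNED: [&str; 2] = ["localhost", "batman"];
    !BANNED.contains(&hostname)
}

// Returns every sample on which the model (schema) and the
// implementation (backend) disagree.
fn find_drift<'a>(samples: &[&'a str]) -> Vec<&'a str> {
    samples
        .iter()
        .copied()
        .filter(|h| schema_accepts(h) != backend_accepts(h))
        .collect()
}
```

Calling `find_drift(&["foobar", "localhost", "batman"])` lists exactly the inputs that a schema-only client would wrongly let through. A check like this only finds drift for the samples it is given, so it is a test aid rather than a guarantee.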

The result might be that a frontend not only needs to use the generated JSON Schema but also re-implement the custom business logic: You will often find functions that are being run after the input has been validated against a schema to catch edge cases or business rules that are not modelled correctly.

This is not only expensive in terms of implementation effort but also error-prone, as the logic is often only documented in plain language.

But what if we put the common steps around data parsing and validation into a library and offer this to clients instead of a schema?

common steps exported as a library

As previously mentioned, this is only feasible when all parties are using the same language / framework. This is rarely the case and so we need some common ground. Like JavaScript, WebAssembly has already escaped the browser domain and you can run WebAssembly almost anywhere today. This makes it a perfect match for compiling and distributing our validation steps in a ubiquitous assembly language.

As WebAssembly is only the instruction set and execution environment, it does not offer any means to move structured data into it. If you want to shuttle data into the WebAssembly environment, you need to pass a pointer to it, which is then read and cast into the respective type within the WebAssembly program. This differs for each language that interfaces with WebAssembly and needs to be implemented accordingly. It is much like how FFI wrappers make C/C++ libraries usable from other languages like Python, Go or Ruby. While there is first-class Rust support for JavaScript environments, one might need to write custom pointer magic to shuttle the data in and out for other languages.

For the approach described in this article, being able to pass a string is enough, as everything can be serialized to and deserialized from it.

We can fully abstract our common steps and represent them like this:

fn parse_request_payload(value: &str) -> String;
fn validate_business_logic(value: &str) -> String;

The result String is the serialized form of a type like

enum ValidationResult<T> {
    Success { validated: T },
    Error { error: String, path: Option<String> }
}

These functions can then be used in any environment that is capable of running WebAssembly code. Within each function the type parsing from str to the actual type occurs, followed by all the custom code for the business validation. If any state is required to run the business validation, this could also be passed as a second argument.
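To illustrate the idea of passing state as a second argument, here is a minimal sketch. It assumes that the state is a newline-separated list of banned hostnames handed over as a plain string; the function name `validate_business_logic_with_state` and that encoding are made up for this example. The result type is reduced to a hostname and is returned as a value instead of being serialized, since serialization would need a library such as serde.

```rust
/// Mirrors the shape of `ValidationResult`, reduced to a hostname.
#[derive(Debug, PartialEq)]
enum ValidationResult {
    Success { validated: String },
    Error { error: String, path: Option<String> },
}

/// Hypothetical variant of `validate_business_logic` that receives its
/// state (banned hostnames, one per line) as a second string argument.
fn validate_business_logic_with_state(hostname: &str, banned_list: &str) -> ValidationResult {
    // Ignore blank lines and surrounding whitespace in the state string.
    let is_banned = banned_list
        .lines()
        .map(str::trim)
        .any(|banned| !banned.is_empty() && banned == hostname);

    if is_banned {
        ValidationResult::Error {
            error: "illegal hostname".to_owned(),
            path: Some("hostname".to_owned()),
        }
    } else {
        ValidationResult::Success {
            validated: hostname.to_owned(),
        }
    }
}
```

Because the state is also just a string, the same pointer-and-length mechanism that carries the payload can carry it, so no additional binding code per type is needed.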

To make things easier for this blog post, we combine both steps into one as we expect all clients to go through both steps.

fn parse_and_validate(s: &str) -> String;

Let us dive into an example project that has a Rust backend with a POST endpoint that accepts parameters to create a network host. If valid, the input data is returned together with an ID. This is an endpoint one encounters in any service that offers a CRUD interface.

#[derive(Serialize, Deserialize)]
pub struct CreateHostParams {
    pub hostname: String,
    pub ipv4: Ipv4Addr
}

There are several business rules that are not reflected in the type: the hostname must not be localhost or batman, and the ipv4 address must not be a loopback or broadcast address.

impl CreateHostParams {
    pub fn validate(&self) -> Result<(), BusinessValidationError> {
        let CreateHostParams { hostname, ipv4 } = self;
        let banned_hostnames = Vec::from(["localhost", "batman"]);
        if banned_hostnames.contains(&hostname.as_str()) {
            return Err(BusinessValidationError { message: "illegal hostname".to_owned(), path: "hostname".to_owned() });
        }
        // non exhaustive, `is_global()` would be, but is unstable and requires nightly
        if ipv4.is_loopback() || ipv4.is_broadcast() {
            return Err(BusinessValidationError { message: "illegal ipv4 address".to_owned(), path: "ipv4".to_owned() });
        }
        Ok(())
    }
}

You can try implementing these constraints in JSON Schema; however, things become quite complicated when you try to auto-generate the schema from your code.
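Since `is_global()` is unstable, a stricter check on stable Rust has to be assembled from the predicates that `std::net::Ipv4Addr` does offer. The following sketch is an assumption about what such a rule could look like, not the rule used in the example project, and the name `looks_publicly_routable` is made up. Note that it would reject private addresses such as 192.168.13.37, so whether it fits depends on the actual business requirement.

```rust
use std::net::Ipv4Addr;

/// Approximates "publicly routable" using only methods that are stable
/// on `Ipv4Addr`. This is NOT equivalent to the unstable `is_global()`:
/// for example, the shared address space 100.64.0.0/10 and reserved
/// ranges are not covered here.
fn looks_publicly_routable(ip: Ipv4Addr) -> bool {
    !(ip.is_unspecified()      // 0.0.0.0
        || ip.is_loopback()    // 127.0.0.0/8
        || ip.is_private()     // 10/8, 172.16/12, 192.168/16
        || ip.is_link_local()  // 169.254.0.0/16
        || ip.is_multicast()   // 224.0.0.0/4
        || ip.is_broadcast()   // 255.255.255.255
        || ip.is_documentation()) // 192.0.2.0/24, 198.51.100.0/24, 203.0.113.0/24
}
```

A rule like this is exactly the kind of logic that is awkward to express in a generated JSON Schema, yet it is a single function call once the validation code itself is shared.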

The types JsonRejection and BusinessValidationError both store the path where the error occurred within the structure. This makes it easier to map an error to an <input> field within a <form>.

pub enum JsonRejection {
    JsonDataError { message: String, path: String },
    JsonSyntaxError { message: String },
}

pub struct BusinessValidationError {
    pub message: String,
    pub path: String,
}

All these types and functions are put into a shared library. There is a special method implemented for CreateHostParams that combines everything a backend would do into a single function that can easily be exported to WebAssembly.

impl CreateHostParams {
    /// to be called by WebAssembly targets
    pub fn parse_and_validate(s: &str) -> ValidationResult<CreateHostParams> {
        match CreateHostParams::parse_str(s) {
            Ok(validated) => {
                if let Err(BusinessValidationError { message, path }) = validated.validate() {
                    ValidationResult::Error {
                        error: message,
                        path: Some(path),
                    }
                } else {
                    ValidationResult::Success { validated }
                }
            }
            Err(JsonRejection::JsonDataError { message, path }) => ValidationResult::Error {
                error: message,
                path: Some(path),
            },
            Err(JsonRejection::JsonSyntaxError { message }) => ValidationResult::Error {
                error: message,
                path: None,
            },
        }
    }
}

The whole project structure looks like this; the green boxes indicate that the shared code is used and executed by this component:

common steps exported as a library

You can find the full project on github.com/mguentner/webassembly_validation.

Check out the README within the project for instructions on how to compile / run every component.

An axum handler then uses these functions directly.

async fn create_host(
    AppJson(payload): AppJson<CreateHostParams>
) -> Result<impl IntoResponse, AppError> {
    // Business validation
    if let Err(err) = payload.validate() {
        return Err(AppError::BusinessValidationError(err));
    }
    let host = Host {
        id: 1337,
        hostname: payload.hostname,
        ipv4: payload.ipv4,
    };
    Ok((StatusCode::CREATED, AppJson(host)))
}

main.rs

AppJson is a custom extractor that enhances the behavior of Json slightly to make the result structured, instead of a plaintext string, and supply the path.

struct AppJson<T>(T);

#[async_trait]
impl<S> FromRequest<S> for AppJson<CreateHostParams>
where
    Bytes: FromRequest<S>,
    S: Send + Sync,
{
    type Rejection = AppError;

    async fn from_request(req: Request, state: &S) -> Result<Self, Self::Rejection> {
        // Extract the raw body first so that parsing goes through the shared code.
        let bytes = Bytes::from_request(req, state)
            .await
            .map_err(|_err| AppError::BytesRejection { message: "unable to extract bytes".to_owned() })?;
        let body = shared::from_bytes::<CreateHostParams>(bytes.as_ref())?;
        Ok(Self(body))
    }
}

The wasm_validation folder was created using wasm-pack-template and uses wasm-pack to create the distributable JavaScript library out of the Rust codebase. The core of it is lib.rs, where the validate_create_host_params function is compiled to .wasm. Only a single line is needed to call the actual shared validation code; the other parts are binding code. In other words, only a handful of lines of code need to be maintained for a WebAssembly target!

use shared::CreateHostParams;
use wasm_bindgen::prelude::*;

#[wasm_bindgen]
pub fn validate_create_host_params(s: JsValue) -> JsValue {
    let as_str: String = serde_wasm_bindgen::from_value(s).unwrap();
    let res = CreateHostParams::parse_and_validate(&as_str);
    serde_wasm_bindgen::to_value(&res).unwrap()
}

The previously created .wasm file is included in the package.json of a React application through the generated library.

"dependencies": {
  "react": "^18.3.1",
  "react-dom": "^18.3.1",
  "wasm_validation": "file:../wasm_validation/pkg"
},

A wasm loader plugin is necessary for Vite but this is easy to include.

export default defineConfig({
  plugins: [
    react(),
    wasm(),
    topLevelAwait()
  ],
})

vite.config.ts

The function itself can be imported and called just like any other TypeScript/JavaScript function:

import { validate_create_host_params } from "wasm_validation";

type Error = {
  type: "Error";
  error: string;
  path: string | null;
}

type Success = {
  type: "Success";
  validated: object;
}

type Result = Success | Error

const res = validate_create_host_params(value) as Result;

The content of a <textarea> is now checked and once Success is returned, the application allows sending the request to the backend. Since the path is returned, one can also attach the error to the corresponding <input> in a structured <form>.

🎉 Success: The backend and the frontend execute the same code parts to deserialize the type (serde) and to validate the business logic.

But what about other platforms? To demonstrate, I have implemented a client in Golang that uses the wazero WebAssembly runtime. The code follows the allocation example.

For this, a slightly different WebAssembly target is needed as the binding for JavaScript obviously won’t work.

fn validate_create_host_params(value: &str) -> String {
    let res = CreateHostParams::parse_and_validate(value);
    serde_json::to_string(&res).unwrap()
}

#[cfg_attr(all(target_arch = "wasm32"), export_name = "validate_create_host_params")]
#[no_mangle]
pub unsafe extern "C" fn _validate_create_host_params(ptr: u32, len: u32) -> u64 {
    let payload = &ptr_to_string(ptr, len);
    let result = validate_create_host_params(payload);
    let (ptr, len) = string_to_ptr(&result);
    // Leak the result so the host can still read it after this function returns.
    std::mem::forget(result);
    // Pack pointer (upper 32 bits) and length (lower 32 bits) into one return value.
    ((ptr as u64) << 32) | len as u64
}

Along with this function, several helper functions are needed for allocation and deallocation. For documentation, please refer to the upstream example code.
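To give an impression of what such helpers do, here is a minimal sketch that also runs natively. It is not the upstream example code: the helper names `allocate`, `deallocate`, `read_string`, `pack` and `unpack` are chosen for illustration, and pointers are kept as native pointers instead of the `u32` offsets a wasm32 target would use.

```rust
/// Leaks a zeroed buffer of `size` bytes so the host can write a payload into it.
fn allocate(size: usize) -> *mut u8 {
    Box::into_raw(vec![0u8; size].into_boxed_slice()) as *mut u8
}

/// Frees a buffer that was returned by `allocate` with the same `size`.
unsafe fn deallocate(ptr: *mut u8, size: usize) {
    unsafe {
        // Rebuild the boxed slice and drop it, which releases the memory.
        drop(Box::from_raw(std::ptr::slice_from_raw_parts_mut(ptr, size)));
    }
}

/// Copies `len` bytes starting at `ptr` into an owned `String`.
unsafe fn read_string(ptr: *const u8, len: usize) -> String {
    let bytes = unsafe { std::slice::from_raw_parts(ptr, len) };
    String::from_utf8_lossy(bytes).into_owned()
}

/// Packs a 32-bit pointer and a 32-bit length into a single u64 return value.
fn pack(ptr: u32, len: u32) -> u64 {
    ((ptr as u64) << 32) | len as u64
}

/// Host-side inverse of `pack`: upper half is the pointer, lower half the length.
fn unpack(packed: u64) -> (u32, u32) {
    ((packed >> 32) as u32, packed as u32)
}
```

The host calls the allocation helper, copies the payload into the returned buffer, invokes the exported function, unpacks pointer and length of the result, reads it, and finally frees both buffers. Packing two 32-bit values into one `u64` is a common way to return a pointer together with a length through a single numeric return value.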

The .wasm is produced by the command cargo build --release --target wasm32-unknown-unknown and the resulting .wasm file is placed into the go_client project root:

cp target/wasm32-unknown-unknown/release/wasm_validation_nojs.wasm ../go_client/.

Now to the Golang code.

The whole function should be wrapped in order to abstract away the allocation / deallocation of memory that is used to shuttle data in and out of the WebAssembly runtime. The signature of this function is simple. Golang types can simply be marshaled into strings in order to call this function.

func validate(payload string) (*string, error)

Calling this wrapper function looks like this.

localhostPayload := `{
	"hostname": "localhost",
	"ipv4": "192.168.13.37"
}`
res, err = validate(localhostPayload)
if err != nil {
	log.Panicf("validate panicked with %v", err)
}
log.Printf("Validation result for payload with localhost: %s", *res)

The reduced wrapper function:

//go:embed wasm_validation_nojs.wasm
var validationWasm []byte

func validate(payload string) (*string, error) {
	ctx := context.Background()
	r := wazero.NewRuntime(ctx)
	defer r.Close(ctx)
	mod, err := r.Instantiate(ctx, validationWasm)
	if err != nil {
		return nil, err
	}
	validate := mod.ExportedFunction("validate_create_host_params")
	allocate := mod.ExportedFunction("allocate")
	deallocate := mod.ExportedFunction("deallocate")
	// boring pointer magic
	ptrSize, err := validate.Call(ctx, payloadPtr, payloadSize)
	if err != nil {
		return nil, err
	}
	// more pointer magic for resultPtr & resultSize
	bytes, _ := mod.Memory().Read(resultPtr, resultSize)
	result := string(bytes)
	return &result, nil
}

Upon executing the binary, we receive the following output:

Validation result for {}: {"type":"Error","error":"missing field `hostname` at line 1 column 2","path":"."}
Validation result for payload with localhost: {"type":"Error","error":"illegal hostname","path":"hostname"}
Validation result for complete payload: {"type":"Success","validated":{"hostname":"foobar","ipv4":"192.168.13.37"}}
Rust Backend POST request completed with status: 201 - body {"id":1337,"hostname":"foobar","ipv4":"192.168.13.37"}

This post demonstrates how to re-use backend validation code with WebAssembly instead of using fuzzy schemas that do not fully capture the behavior of an API. Especially with Rust, this was relatively easy given the number of languages and systems involved. It was satisfying to see the same output of a Rust backend being rendered by a React and a Golang application without re-implementing a single line of code. I can imagine using this approach in production, but would only recommend it if you have total control over your clients or are dealing with other teams and users who are ready for it.