sourcediver.org

2017/01/18

In a comment on GitHub the question was asked why one should care about IPFS and the integration of the technology into Nix. I want to answer this question with this blog post.

First of all, let me rephrase the question as “Why should we care about IPFS?” instead of “Why should I care?”, since this question concerns all users of the very centralized Internet. That also includes users and developers of Nix(OS).

Currently almost the whole infrastructure of NixOS relies on private companies that offer particular services. These include

This perfectly mirrors the current state of the Internet. If you need a solution for X, there is most certainly a private (closed source) “cloud” for it. But remember! There is no cloud - there are just other people’s computers. In most cases you are not able to replicate the behaviour of these systems yourself. In other words, you cannot run an S3 in your basement and you cannot fix bugs in GitHub's issue system yourself. Thus you have lost control over the service or product you are providing, because you cannot fix all problems within the infrastructure. Third-party dependencies also raise the bar for developers who want to contribute by maintaining a fork of a project.

From a risk management and complexity point of view, the dependencies of an infrastructure for developing, distributing and maintaining open source software should be kept to a minimum. Of course this is not always possible without making compromises that would jeopardize the final goal. That means GitHub might still be the best choice if one wants to build software at a large scale, in different timezones and on different continents, with 1000+ contributors.

Now you might interrupt me and try to convince me that Amazon is providing one of the best storage concepts and CloudFront a decent CDN, and that it is very hard to serve big files globally without spinning up servers on every continent, which is very expensive.

This is very true - until now.

IPFS aims to create the distributed net. In other words:

Once you add a file to IPFS, it can be addressed by its hash, that is, by its content and not by its location. Think of IPFS as BitTorrent + Git (Merkle DAG) put together.
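To make the idea of content addressing concrete, here is a minimal sketch in Python. It is not how IPFS is implemented: the `ContentStore` class is a hypothetical toy, and it uses a plain SHA-256 hex digest as the address, whereas IPFS uses its own multihash-based identifiers and splits files into a Merkle DAG.

```python
import hashlib


class ContentStore:
    """Toy content-addressed store: the key is derived from the bytes themselves."""

    def __init__(self):
        self._blocks = {}

    def add(self, data: bytes) -> str:
        # The address is the SHA-256 digest of the content. Real IPFS
        # identifiers look different; plain hex is a simplification.
        address = hashlib.sha256(data).hexdigest()
        self._blocks[address] = data
        return address

    def get(self, address: str) -> bytes:
        data = self._blocks[address]
        # Anyone can re-check that the bytes match the address they asked for.
        if hashlib.sha256(data).hexdigest() != address:
            raise ValueError("content does not match its address")
        return data


store = ContentStore()
addr_a = store.add(b"hello nix")
addr_b = store.add(b"hello nix")   # same content, same address
addr_c = store.add(b"hello ipfs")  # different content, different address
```

Because the address depends only on the content, it does not matter which machine hands you the bytes: you can always verify that you received what you asked for.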

Have a look at the frontpage of ipfs.io for a short summary.

Please bear in mind that IPFS is still alpha software at the time of writing and the performance is not that great yet. NixOS would be an early adopter.

(IPFS offers much more; have a look at IPNS once you get the idea of IPFS.)

NixOS currently uses a centralized storage concept from the perspective of a user. If one wants to upgrade an installation, CloudFront will serve the .nar files using their CDN. However, there is almost no way to effectively cache files for other machines that need to fetch the same .nar files in the future. If cache.nixos.org is currently not reachable for you, that also means that you cannot perform any updates, or you need to recompile everything yourself.

With IPFS, any machine that has the .nar pinned or not yet garbage-collected would also redistribute the .nar files, while the hash makes sure that the files stay intact. That means the initial fetch might saturate the downstream of your internet link, but any following request from within your network would be served at whatever speed your internal network allows. That means…yes, in theory, once the performance of IPFS has improved, doing a nixos-rebuild switch at gigabit speeds is entirely possible.

You can even update if your internet link goes down, as long as another machine on your network still holds the files!
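The redistribution idea can be sketched with a small simulation. This is only an illustration under simplifying assumptions: the `Node` class, its `peers` list and the `upstream` callable are hypothetical names, there is no real networking, and peer discovery in IPFS works very differently.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


class Node:
    """Hypothetical machine that keeps fetched blobs and serves them to peers."""

    def __init__(self, name, upstream, peers=()):
        self.name = name
        self.upstream = upstream   # callable: digest -> bytes, may raise
        self.peers = list(peers)   # other Node objects on the same network
        self.store = {}            # digest -> bytes (not yet garbage-collected)

    def has(self, digest):
        return digest in self.store

    def fetch(self, digest):
        if digest in self.store:
            return self.store[digest]
        # Ask machines on the local network first, then the remote cache.
        sources = [p.store.__getitem__ for p in self.peers if p.has(digest)]
        sources.append(self.upstream)
        for source in sources:
            try:
                data = source(digest)
            except Exception:
                continue  # source unreachable, try the next one
            if sha256_hex(data) == digest:
                self.store[digest] = data  # keep it so we can redistribute
                return data
        raise LookupError(f"{digest} not available from any source")


nar = b"pretend this is a .nar file"
digest = sha256_hex(nar)
upstream_hits = []


def remote_cache(d):
    upstream_hits.append(d)
    return {digest: nar}[d]


def offline(d):
    raise ConnectionError("internet link is down")


laptop = Node("laptop", remote_cache)
laptop.fetch(digest)                  # goes over the internet link once
desktop = Node("desktop", offline, peers=[laptop])
desktop_copy = desktop.fetch(digest)  # served by the laptop, link not needed
```

In this sketch the remote cache is contacted exactly once; the second machine gets a verified copy from its neighbour even though its own uplink is down.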

Now comes the fun part…

Currently, when building, Nix fetches the sources from different providers. That can be a git repository on GitHub or on some server that the sole developer of the library operates. Maybe it’s a .tar.gz that is hosted by several universities around the world…or on a single FTP server that goes down from time to time.

When writing a derivation, the IPFS hash could simply be included in src along with the sha256 of each package, and Nix would fetch the source using IPFS and rehost it. As long as a single copy is reachable through IPFS, an outage of GitHub or an unreachable / offline FTP server would not break nixpkgs! It would even be possible to keep the source packages for a long time (read: an archive). This would make it possible to build very old versions of nixpkgs even after the files have been removed or the domain has changed (which may be useful when doing a git bisect on nixpkgs).
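A rough sketch of that fallback logic, written in Python rather than Nix to keep it self-contained. Everything here is an assumption made for illustration: the `Source` record, its `ipfs` field, the placeholder hash and the two fetcher callables are hypothetical and do not correspond to an existing Nix fetcher.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Source:
    """Hypothetical stand-in for the `src` of a derivation."""
    url: str                    # original upstream location
    sha256: str                 # hash that is already recorded today
    ipfs: Optional[str] = None  # assumed extra field: IPFS hash of the same file


def fetch_source(src: Source,
                 fetch_ipfs: Callable[[str], bytes],
                 fetch_url: Callable[[str], bytes]) -> bytes:
    # Try IPFS first if a hash is known, then the original location.
    attempts = []
    if src.ipfs is not None:
        attempts.append(lambda: fetch_ipfs(src.ipfs))
    attempts.append(lambda: fetch_url(src.url))
    for attempt in attempts:
        try:
            data = attempt()
        except OSError:
            continue  # this location is down, try the next one
        # Whatever the location, the sha256 decides whether the data is accepted.
        if hashlib.sha256(data).hexdigest() == src.sha256:
            return data
    raise RuntimeError(f"could not fetch {src.url} from any location")


tarball = b"pretend this is foo-1.0.tar.gz"
src = Source(
    url="ftp://example.org/foo-1.0.tar.gz",  # placeholder URL
    sha256=hashlib.sha256(tarball).hexdigest(),
    ipfs="QmHypotheticalHash",               # placeholder, not a real hash
)


def ftp_is_down(url):
    raise ConnectionError("ftp server unreachable")


def ipfs_has_copy(ipfs_hash):
    return tarball


data = fetch_source(src, fetch_ipfs=ipfs_has_copy, fetch_url=ftp_is_down)
```

The existing sha256 stays the source of truth, so adding IPFS as an extra location does not weaken the integrity check.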

This is a separate topic; I will only write about the binary distribution in this post…back to topic!

IPFS leaves a lot of room for how to use it for NixOS, as it is “just” a protocol / linked data structure. Everything is possible, starting with an approach that is limited to content distribution and ending with integrating it deeply into Nix. However, as stated before, IPFS is still at an early stage. A lot of details are still changing and the performance is not stable enough to operate it on every machine yet (i.e. replacing HTTP with IPFS).

Therefore a roadmap for IPFS and NixOS is needed.

Stage 1 still needs some work but should be deployable within the near future. Stage 2 and 3 are hypothetical ideas.

Stage 1 is the trivial approach. IPFS is not integrated into Nix on a conceptual level at all. It’s just another binary cache from which Nix will fetch the .nar files and quite possibly the .narinfo files as well. That means it can be used along with https://cache.nixos.org or any other binary cache.

A local IPFS daemon can be used but is not needed, as everything is possible using IPFS-HTTP gateways. That means a dedicated machine could be used per network, or simply a public gateway (there are currently a few out there, but the NixOS project could run one of its own).
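Since a gateway exposes content under a plain HTTP path, the three gateway options can be sketched as a list of candidate URLs. The helper names and the `gateway.lan` host are hypothetical, and how a .narinfo would map to an IPFS hash is left open here; the `/ipfs/<hash>` path and the local gateway on port 8080 follow the defaults of the reference IPFS daemon.

```python
def gateway_url(gateway: str, ipfs_hash: str) -> str:
    # Gateways serve content under /ipfs/<hash>.
    return gateway.rstrip("/") + "/ipfs/" + ipfs_hash


GATEWAYS = [
    "http://127.0.0.1:8080",    # gateway of a local daemon, if one is running
    "http://gateway.lan:8080",  # hypothetical dedicated machine per network
    "https://ipfs.io",          # a public gateway
]


def candidate_urls(ipfs_hash, gateways=GATEWAYS):
    """Return the URLs to try, in order of preference."""
    return [gateway_url(g, ipfs_hash) for g in gateways]


urls = candidate_urls("QmHypotheticalNarHash")  # placeholder, not a real hash
```

From the point of view of Nix, each of these is just another HTTP location, which is why this stage needs no conceptual changes.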

In stage 2, .nar files are mounted directly into the /nix/store using FUSE. It makes sense to have IPFS running on each machine, but everything would still work with gateways.

In stage 3, each /nix/store path is fetched directly through IPFS (i.e. without the intermediate .nar mounting and/or extraction). That would require a locally running IPFS daemon, with the upside that each machine (if enabled) would redistribute certain paths of the /nix/store.

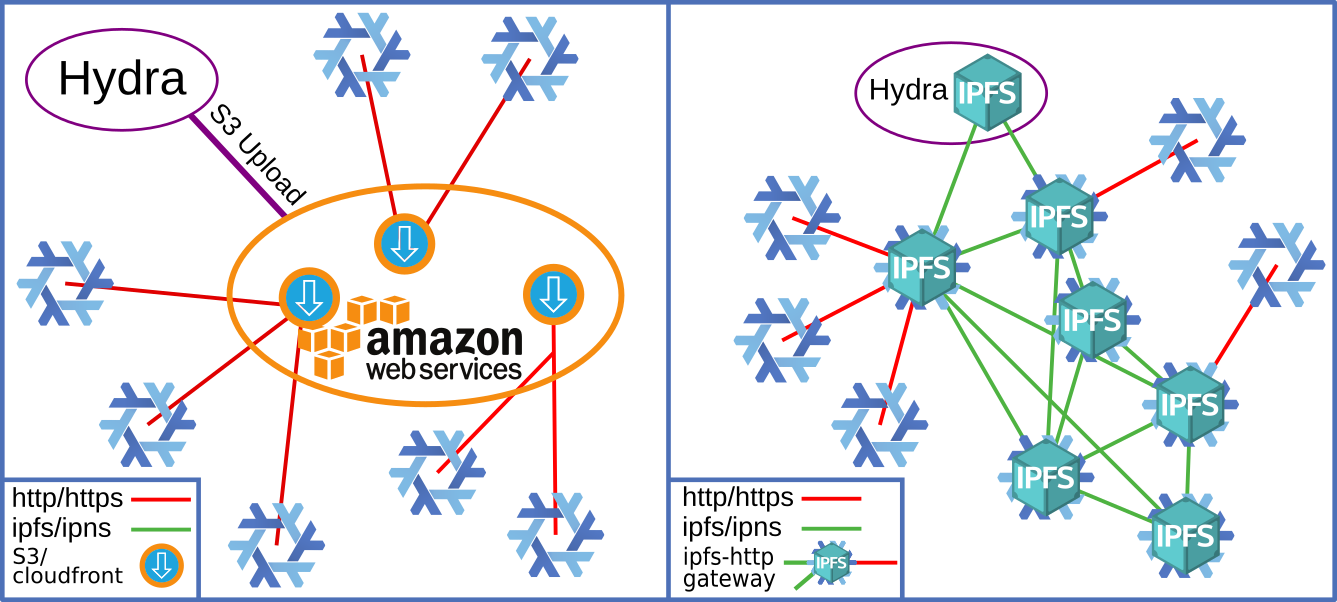

Here is a colorful drawing that shows the status quo on the left and a possible future state on the right.

.nar files that the Hydra produces.

If you want to contribute to the discussion, please reply in the following threads:

Update 2017/02/06:

I moved everything over to https://github.com/NixIPFS

Update 2024/02/21:

This is an old post and has been restored for reference. I wrote a comment on GitHub about where the experiments laid out in this post went. An RFC has been accepted for Nix itself. A tracking issue on GitHub exists for the main effort of supporting systems like, but not limited to, IPFS.